In an AI-powered future where predictability governs applications, companies that invest the most time in understanding their customers will gain a competitive advantage. Product development is changing dramatically as artificial intelligence transforms user interactions. UX researchers face new challenges in understanding and optimizing experiences with AI-enhanced products. As we integrate more AI and ML touchpoints, we must go beyond traditional UX research methods to ensure responsible AI development and address issues such as bias and other ethical concerns.

AI models do many things in products, but on their own they do not understand users beyond surface-level predictive responses. To truly understand the people using your product, there is no substitute for traditional UX research, the best of which incorporates deep listening to build mental models for purpose-driven interaction. A language model guided by a well-defined mental model will predict better tokens, with a clearer understanding of the consumer's interaction style and goals. This is how product teams can make great products in the current age of AI models.

The Role of UX Research in AI Applications

Understanding the purpose behind incorporating AI is crucial for building a successful product, whether your application requires a general-purpose model or a specialized one. Artificial intelligence is reshaping the landscape of UX research by offering advanced tools for data analysis and process automation. However, the integration of AI into UX design demands a nuanced approach that combines these tools with traditional research methodologies and continuous improvement.

Targeted Feedback Mechanisms: Implementing targeted feedback mechanisms helps capture user experiences and perceptions of AI system outputs. This allows designers to understand where and how the AI's predictions deviate from user expectations. Where possible this should include

User-Centered Design Approach: By focusing on end-users' needs and expectations, UX researchers can help align AI model outputs with user mental models, improving perceived predictability.

Iterative Testing and Refinement: Utilizing methodologies like usability testing and A/B testing enables iterative refinement of AI models, making their outputs more consistent over time.
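The A/B testing mentioned above can be made concrete with a simple significance check. The sketch below assumes the outcome being compared is how often users accept an AI suggestion without editing it, and it uses a standard two-proportion z-test. The function name and the counts are illustrative assumptions, not figures from a real study.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference between two acceptance rates."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all outcomes are identical; z is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative counts: suggestions accepted as-is under each model variant.
z, p_value = two_proportion_z_test(success_a=120, n_a=200, success_b=150, n_b=200)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

Because AI outputs vary between runs, each variant generally needs enough sessions for its rate to settle before the two are compared.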

The Unpredictability Problem

In traditional applications, clicking a button yields a predictable outcome. However, interacting with an AI system can produce varied results even with the same input, posing unique challenges for UX researchers. This is a primary reason AI researchers consider observability and explainability key in understanding models.

Enhancing Predictability and User Trust

UX researchers play a crucial role in managing user expectations by designing interfaces that clearly communicate AI system capabilities and limitations. This transparency is vital for building trust and ensuring users have realistic expectations of what AI can achieve. While data scientists and AI engineers can control aspects of the model like temperature (output randomness) and top-k sampling (a technique that limits selection to the k most likely next tokens when generating text), they cannot control the model's output with certainty. Strategies like fine-tuning and Retrieval Augmented Generation, combined with systems of guardrails, can increase an application’s ability to constrain output, but observation is key to predicting and tuning these operations.
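To make temperature and top-k less abstract, here is a minimal sketch of how the two settings shape a single sampling step. It is a toy illustration using only the Python standard library, not how any particular model or vendor API implements sampling. The function name `sample_next_token` and the token scores are hypothetical.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Sample one token from a {token: score} mapping.

    temperature below 1 sharpens the distribution; above 1 flattens it.
    top_k keeps only the k highest-scoring tokens before sampling.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Rank candidates and keep only the k most likely, if requested.
    ranked = sorted(logits.items(), key=lambda kv: kv[1], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]
    # Softmax over temperature-scaled scores (shifted by the max for stability).
    scaled = [score / temperature for _, score in ranked]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    tokens = [token for token, _ in ranked]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores for the word following "Your order is ..."
logits = {"shipped": 2.0, "delayed": 1.5, "cancelled": 0.5, "purple": -3.0}
rng = random.Random(0)
varied = {sample_next_token(logits, temperature=1.0, rng=rng) for _ in range(200)}
greedy = {sample_next_token(logits, top_k=1, rng=rng) for _ in range(200)}
print("temperature=1.0:", sorted(varied))
print("top_k=1:", sorted(greedy))
```

With `top_k=1` the same input always yields the highest-scoring token, while sampling at temperature 1.0 produces several different continuations. That spread is what users experience as unpredictability.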

Key strategies include:

Expectation Management: Designing interfaces that effectively communicate the AI system's capabilities and limitations helps set appropriate user expectations for model outputs. UX and AI researchers should consider this a high priority.

Contextual Inquiry: Studying how users interact with AI systems in real-world contexts identifies scenarios where predictability is most critical, helping prioritize model improvements.

Error Impact Analysis: Metrics like the "Error Impact Score" quantify the perceived impact of AI errors on users, which helps prioritize which types of prediction errors to address first.

Algorithmic Transparency: Providing insight into how AI systems make decisions, including what data is used, when, and where in the customer journey, helps users understand and trust the technology.

Cross-Functional Collaboration

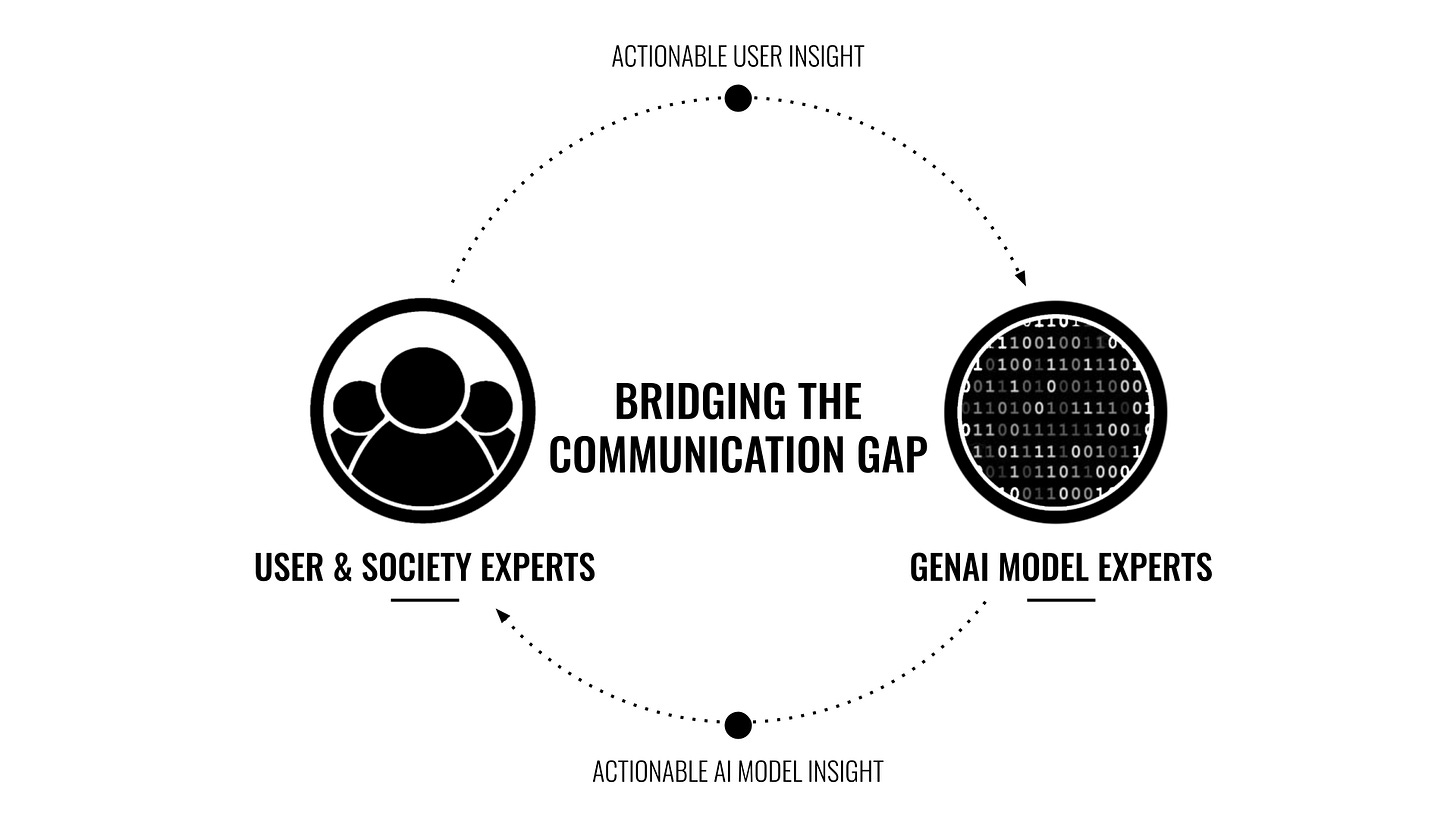

Collaboration between UX researchers, AI engineers, and end-users ensures that technical improvements in model predictability translate to meaningful improvements in user experience. This collaborative approach is essential for creating systems that align better with user expectations and needs. Bridging the communication gap between technical experts and user-centered design teams is crucial for responsible AI development.

Insights from Google PAIR

"One of our team’s core objectives is to ensure the practical application of deep user-insight into AI-powered product design decisions at Google by bridging the communication gap between the vast technological expertise of our engineers and the user/societal expertise of our academics, research scientists, and user-centered design research experts. We’ve built a multidisciplinary team with expertise in these areas, deepening our empathy for the communication needs of our audience, and enabling us to better interface between our user & society experts and our technical experts." .

Google's Responsible AI UX team emphasizes the critical importance of seamless communication across teams and disciplines in responsible product design. By bridging the communication gap between the technological expertise of engineers and the user-centric insights from academics and researchers, Google ensures that AI-powered product development is both responsible and aligned with user needs.

Limitations of Traditional Usability Metrics

Traditional usability metrics, such as task completion time, success rate, and error rate, are often insufficient for AI-driven products because they assume a deterministic interaction model. AI systems, however, can produce varied outcomes from the same input, both because generation is probabilistic and because of their learning and adaptive capabilities.

This non-deterministic nature requires new approaches to accurately assess usability.

New Concepts & Metrics for AI-Driven Interactions

Error Impact and Correction Rates

False Negatives and Positives: These are crucial in understanding where AI models fail to meet user expectations. Tracking these errors can help identify areas where the AI needs tuning.

Correction Rate: Measures how often users need to override or correct AI outputs, indicating potential misalignments with user needs.
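As a rough sketch, the error rates and correction rate described above could be computed from an interaction log like this. The `Interaction` record and its field names are assumptions made for illustration; a real product would map them onto its own logging schema.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    ai_flagged: bool      # what the AI predicted (e.g. "this email is spam")
    truly_positive: bool  # ground truth from a reviewer or the user
    user_corrected: bool  # did the user override or edit the AI output?


def error_and_correction_rates(interactions):
    """Return false positive rate, false negative rate, and correction rate."""
    fp = sum(i.ai_flagged and not i.truly_positive for i in interactions)
    fn = sum(not i.ai_flagged and i.truly_positive for i in interactions)
    negatives = sum(not i.truly_positive for i in interactions)
    positives = sum(i.truly_positive for i in interactions)
    corrected = sum(i.user_corrected for i in interactions)
    return {
        "false_positive_rate": fp / negatives if negatives else 0.0,
        "false_negative_rate": fn / positives if positives else 0.0,
        "correction_rate": corrected / len(interactions) if interactions else 0.0,
    }


log = [
    Interaction(True, True, False),
    Interaction(True, False, True),    # false positive, user corrected it
    Interaction(False, True, True),    # false negative, user corrected it
    Interaction(False, False, False),
]
rates = error_and_correction_rates(log)
print(rates)
```

Tracking the correction rate alongside the error rates helps separate errors users notice and fix from errors that pass silently.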

User Satisfaction and Expectation Alignment

User Surprise Index: Captures instances where AI behavior deviates significantly from user expectations, providing insights into user satisfaction and trust.

Scenario-Based Accuracy: Evaluates AI performance across diverse scenarios to understand context-specific strengths and weaknesses.

Feature-Specific Satisfaction Scores: Implement micro-surveys for individual AI-powered features, allowing users to rate their satisfaction immediately after interacting with specific functionalities.
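One possible way to turn micro-survey answers into a User Surprise Index is sketched below. The definition used here is an assumption for illustration, not an established standard: it is the share of responses, per feature, in which users said the result matched their expectations poorly (2 or lower on a 1 to 5 scale). The feature names are hypothetical.

```python
from collections import defaultdict


def surprise_index(responses, threshold=2):
    """Share of responses, per feature, rated at or below `threshold`.

    Each response is (feature, match_rating), where match_rating is a 1-5
    answer to "How closely did this match what you expected?" (5 = exactly).
    """
    totals = defaultdict(int)
    surprised = defaultdict(int)
    for feature, rating in responses:
        totals[feature] += 1
        if rating <= threshold:
            surprised[feature] += 1
    return {feature: surprised[feature] / totals[feature] for feature in totals}


responses = [
    ("smart_reply", 5), ("smart_reply", 4), ("smart_reply", 1), ("smart_reply", 5),
    ("summary", 2), ("summary", 1), ("summary", 4), ("summary", 5),
]
index = surprise_index(responses)
print(index)
```

Reporting the index per feature rather than for the product as a whole keeps a well-behaved feature from masking a surprising one.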

Shifting Baseline Problem

Traditional UX research assumes stable product behavior. However, non-deterministic AI systems change over time, making it difficult to establish consistent benchmarks. What works well during testing may perform differently in real-world scenarios. It is therefore imperative to document the conditions and time of reporting for all UX research.
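Documenting conditions can be as light as attaching a small record to every study. The sketch below shows one way to do that. The fields chosen (model version, temperature, a hash of the system prompt) and all example values are assumptions about what a team might want to capture, not a prescribed schema.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class StudyConditions:
    study_id: str
    model_version: str       # which model build participants interacted with
    temperature: float       # sampling setting in effect during the sessions
    system_prompt_hash: str  # fingerprint of the prompt/configuration used
    recorded_at: str         # ISO 8601 timestamp, in UTC


def record_conditions(study_id, model_version, temperature, system_prompt_hash, now=None):
    """Build an immutable record of the conditions a study was run under."""
    now = now or datetime.now(timezone.utc)
    return StudyConditions(
        study_id, model_version, temperature, system_prompt_hash, now.isoformat()
    )


# Hypothetical study and model identifiers.
conditions = record_conditions(
    "onboarding-usability-03", "assistant-v1.2", 0.7, "abc123",
    now=datetime(2025, 1, 15, 9, 30, tzinfo=timezone.utc),
)
print(asdict(conditions))
```

Storing this record next to the findings makes it possible to tell later whether a changed result reflects users or a changed model.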

The False Comfort of Averages

Many companies rely heavily on aggregate metrics like task completion rates. But for AI systems, these averages can hide critical issues. A chatbot might have a 90% success rate overall, yet completely fail to handle edge cases that affect vulnerable users, or gradually drift from its original behavior over time.
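The chatbot example can be reproduced with a few lines of code. The sketch below uses made-up session counts and hypothetical segment labels to show how a 90% overall success rate can coexist with a segment that fails most of the time.

```python
from collections import defaultdict


def success_by_segment(sessions):
    """Return the overall success rate and the rate for each user segment.

    sessions: iterable of (segment, succeeded) pairs.
    """
    totals = defaultdict(int)
    wins = defaultdict(int)
    for segment, succeeded in sessions:
        totals[segment] += 1
        wins[segment] += int(succeeded)
    overall = sum(wins.values()) / sum(totals.values())
    per_segment = {segment: wins[segment] / totals[segment] for segment in totals}
    return overall, per_segment


# Made-up data: 100 sessions, 10 of them from screen-reader users.
sessions = (
    [("general", True)] * 88 + [("general", False)] * 2
    + [("screen_reader", True)] * 2 + [("screen_reader", False)] * 8
)
overall, per_segment = success_by_segment(sessions)
print(f"overall: {overall:.0%}")
for segment, rate in per_segment.items():
    print(f"{segment}: {rate:.0%}")
```

Running the same breakdown on a schedule, rather than once, is also a simple way to notice gradual drift.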

Here’s what measuring beyond the average might look like in practice:

Error Impact Score

Instead of just counting errors, measure their psychological impact on users. A small mistake during a critical moment can be more damaging than several minor issues in less sensitive interactions.
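There is no single agreed formula for an Error Impact Score. As one hedged possibility, the sketch below weights each error by a user-reported severity and by how critical the moment was, then normalizes by the number of interactions. The weights, scales, and example errors are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class ErrorEvent:
    description: str
    severity: int       # 1 (barely noticed) to 5 (very upsetting), user-reported
    criticality: float  # weight of the moment, e.g. 1.0 browsing, 3.0 checkout


def error_impact_score(errors, total_interactions):
    """Impact-weighted errors per interaction (a hypothetical formula)."""
    if total_interactions <= 0:
        raise ValueError("total_interactions must be positive")
    return sum(e.severity * e.criticality for e in errors) / total_interactions


minor = [ErrorEvent("odd playlist pick", 1, 1.0)] * 3
critical = [ErrorEvent("wrong payment amount shown", 5, 3.0)]
minor_score = error_impact_score(minor, 100)        # three low-impact errors
critical_score = error_impact_score(critical, 100)  # one high-impact error
print(minor_score, critical_score)
```

Under this weighting, one error at a critical moment outweighs three minor ones, which matches the intuition described above even though a raw error count would rank them the other way.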

Trust Resilience

How many mistakes can an AI make before users lose confidence? This varies by context — a music recommendation mistake has different implications than an error in AI-assisted medical diagnostics.
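Trust Resilience can be approximated from usage logs. The sketch below assumes a simplified event log per user, with the labels "ok", "error", and "abandoned", and reports the median number of errors a user saw before giving up on a feature. Both the event labels and the definition are illustrative assumptions.

```python
from statistics import median


def trust_resilience(user_histories):
    """Median number of AI errors a user saw before abandoning the feature.

    user_histories: {user_id: list of events}, each event one of
    "ok", "error", or "abandoned". Users who never abandon are skipped.
    """
    errors_before_exit = []
    for events in user_histories.values():
        if "abandoned" not in events:
            continue
        cutoff = events.index("abandoned")
        errors_before_exit.append(events[:cutoff].count("error"))
    return median(errors_before_exit) if errors_before_exit else None


# Made-up histories for two contexts.
music = {
    "u1": ["ok", "error", "error", "ok", "error", "abandoned"],
    "u2": ["error", "error", "error", "error", "error", "abandoned"],
    "u3": ["ok", "ok"],
}
medical = {
    "u4": ["error", "abandoned"],
    "u5": ["ok", "error", "abandoned"],
}
music_resilience = trust_resilience(music)
medical_resilience = trust_resilience(medical)
print(music_resilience, medical_resilience)
```

Because users who never abandon are left out, this number understates resilience. It is best read alongside retention figures and qualitative interviews.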

The Ethical Dimension

Here’s where things get even more complicated. Traditional UX research focuses primarily on usability and satisfaction. But AI systems raise additional questions:

How do we measure algorithmic bias in real-world usage?

When should AI systems explicitly acknowledge their limitations?

How do we balance automation with user agency?

A Path Forward?

While there's no perfect solution, several promising approaches are emerging:

Longitudinal Studies: Tracking user interactions over extended periods to understand how relationships with AI systems evolve.

Contextual Error Analysis: Going beyond simple success/failure metrics to explore the situational factors influencing AI performance.

User Expectation Mapping: Documenting not just what users do, but what they expect AI systems to do — and how those expectations shift over time.

Data Journey Mapping: Mapping the data touch points, including type of data, how it is used, where it is stored and what models/processes have access to it, in the same way we map a user’s journey.

Bias Mitigation Strategies: Implementing techniques to identify and reduce biases in AI systems, promoting fairness and inclusivity.

Responsible AI Practices: Establishing guidelines and best practices for ethical AI development and deployment.
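Of the approaches above, Data Journey Mapping lends itself most directly to a simple data structure. The sketch below records each touchpoint with the attributes named in that item (type of data, how it is used, where it is stored, and what has access to it). The class name, field names, and example entries are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DataTouchpoint:
    journey_step: str  # where in the user journey the data appears
    data_type: str     # what kind of data it is
    purpose: str       # how it is used
    storage: str       # where it is stored
    accessed_by: list = field(default_factory=list)  # models/processes with access


def who_can_access(journey, data_type):
    """List every model or process that touches a given type of data."""
    return sorted(
        {name for t in journey if t.data_type == data_type for name in t.accessed_by}
    )


# Made-up journey for a chat product.
journey = [
    DataTouchpoint("sign-up", "email address", "account creation",
                   "accounts database", ["auth service"]),
    DataTouchpoint("chat", "message text", "generating replies",
                   "conversation log", ["chat model", "safety filter"]),
    DataTouchpoint("feedback", "message text", "quality review",
                   "analytics warehouse", ["evaluation pipeline"]),
]
message_access = who_can_access(journey, "message text")
print(message_access)
```

A map like this can sit beside the user journey map, so that questions about transparency ("what sees my messages?") have a documented answer.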

Final Thought

The deeper AI embeds itself into everyday experiences, the more evident the disconnect becomes between conventional UX research and the complexities of AI-human interactions. Though innovative methodologies are on the rise, mastering the measurement and optimization of these interactions remains a work in progress. Gathering comprehensive data and metadata on user interactions empowers us to enhance AI functionalities. By thoroughly understanding your product's context and the users' objectives within that space, AI UX researchers, engineers, and product managers can establish success metrics focused more on meaningful outcomes than on predictable, deterministic measures.